Deepfake Wire Fraud: The Growing Liability for Data Centers

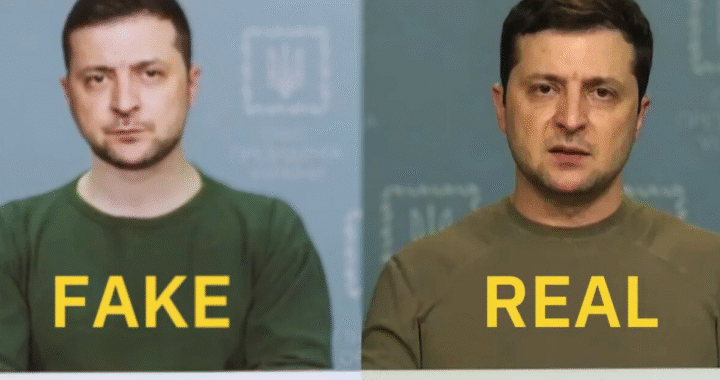

A manipulated video purporting to show Ukrainian President Volodymyr Zelenskyy circulated online in 2022, highlighting growing concerns over the use of deepfakes in scams and disinformation campaigns.

A high-profile deepfake scam in Hong Kong in early 2024 led an employee at a multinational firm to transfer HK$200 million (US$25.6 million) after a video call featuring lifelike imitations of the company's CFO and other senior executives. The money left in 15 separate transactions, and the fraud was uncovered only days later, prompting a cross-jurisdictional investigation.

This is not a sci-fi scenario – it’s a watershed moment testing our ability to defend against the next evolution of social engineering.

— Shannon Murphy, Trend Micro

Government & Financial Sectors Are Prime Targets

Financial institutions are under growing regulatory pressure. FINRA, the self-regulatory organization that oversees U.S. broker-dealers, has warned that generative AI (GenAI) is being used to impersonate financial executives and falsify sensitive documents.

Government agencies are also being targeted. In Hong Kong, deepfake fraudsters have reportedly used face-swapping to open loan and bank accounts, laundering illicit funds. Authorities documented over 20 such incidents by early 2024.

These sectors process high-value, high-frequency transactions. Even minor gaps in biometric or voice authentication can result in substantial losses.

Hidden Liability for Data Centers

Data centers enable these mission-critical operations. As enterprise and government contracts increasingly mandate synthetic-media defenses, data center operators risk:

- Contractual penalties or SLA forfeitures

- Insurance exclusions or premium hikes tied to deepfake risks

- Client attrition due to perceived inadequacy in synthetic-fraud resilience

Deepfake Fraud: A Broader Trend

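The Hong Kong case is not isolated. (Its victim was later identified as the UK-based engineering firm Arup, so reports of a UK multinational losing £20 million, or about US$25 million, to a deepfake video call describe the same incident, not a second one.) Voice cloning alone has also worked: in 2019, the CEO of a UK-based energy firm wired €220,000 (about US$243,000) after a single phone call in which an AI-generated voice imitated the chief executive of the firm's German parent company.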

Deloitte's Center for Financial Services projects that generative-AI-enabled fraud losses in the United States could reach US$40 billion by 2027, up from roughly US$12 billion in 2023.
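As a rough sanity check on that projection, the implied compound annual growth rate can be worked out from its two endpoints. This is a minimal sketch, assuming growth compounds evenly over the four years from 2023 to 2027 and using the rounded US$12 billion and US$40 billion figures; `implied_cagr` is an illustrative helper, not part of any cited analysis.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints from the projection, in US$ billions (2023 -> 2027).
rate = implied_cagr(12.0, 40.0, years=2027 - 2023)
print(f"Implied annual growth: {rate:.1%}")  # Implied annual growth: 35.1%
```

In other words, the projection implies fraud losses growing by roughly a third every year.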

Vendor Checklist for Data Centers

| Control Area | Description |
| --- | --- |
| Forensic deepfake detection | Use audio/video analytics to flag anomalies, e.g., lip-sync mismatches, audio latency, voice stutter |
| Biometric & liveness checks | Integrate facial, voice, and behavioral biometrics with real-time liveness detection during access attempts |
| Dual-channel verification | Require secondary verification through an independent channel (e.g., a callback to a number on file, or SMS) for high-risk transactions or credential updates |
| Contractual safeguards | Include synthetic-media fraud indemnities and detection requirements in SLAs |
| Sector-specific training | Train teams on deepfake typologies and escalation protocols for public-sector and financial client workflows |
| Insurance alignment | Ensure cyber policies explicitly cover synthetic fraud; anticipate new underwriting terms |
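Of these controls, the dual-channel check is the simplest to express as code. The sketch below is a minimal, hypothetical approval rule, assuming a fixed US$10,000 threshold, a separately maintained directory of verified callback numbers, and illustrative names (`TransferRequest`, `approve_transfer`, `HIGH_RISK_THRESHOLD_USD`) that do not come from any particular product. Its key design choice is that the callback number always comes from the directory, never from the request, because an attacker running a deepfake call controls every detail offered during it.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical policy threshold (US$); a real deployment would tune this per client.
HIGH_RISK_THRESHOLD_USD = 10_000.0


@dataclass(frozen=True)
class TransferRequest:
    requester_id: str       # who the request claims to come from
    amount_usd: float
    new_beneficiary: bool   # payee added or edited as part of this request


def is_high_risk(req: TransferRequest) -> bool:
    """Large transfers and payee changes always need a second channel."""
    return req.amount_usd >= HIGH_RISK_THRESHOLD_USD or req.new_beneficiary


def approve_transfer(
    req: TransferRequest,
    primary_channel_ok: bool,
    callback_number_dialed: Optional[str],
    callback_confirmed: bool,
    directory: Mapping[str, str],
) -> bool:
    """Approve only if every required channel has independently confirmed.

    primary_channel_ok: the original request (e.g. a video call) passed its own checks.
    callback_number_dialed: the number staff actually called back, if any.
    directory: verified contact numbers maintained separately from any request.
    """
    if not primary_channel_ok:
        return False
    if not is_high_risk(req):
        return True
    number_on_file = directory.get(req.requester_id)
    # Without a verified number on file, the request cannot be confirmed out of band.
    if number_on_file is None:
        return False
    # The callback must reach the number on file, and that person must confirm.
    return callback_number_dialed == number_on_file and callback_confirmed


# Usage with a fictional directory entry and request.
directory = {"cfo-001": "+1-555-0100"}
req = TransferRequest("cfo-001", 1_700_000.0, new_beneficiary=True)
print(approve_transfer(req, True, None, False, directory))           # False: video call alone
print(approve_transfer(req, True, "+1-555-0199", True, directory))   # False: number given on the call
print(approve_transfer(req, True, "+1-555-0100", True, directory))   # True: confirmed via number on file
```

A convincing video call therefore never suffices on its own for a high-risk request; the decision hinges on a channel the caller cannot influence.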

Why It Matters

Deepfake scams are becoming more sophisticated, frequent, and financially damaging. For data center operators, the risk is no longer abstract. Trust infrastructure – not just uptime metrics – is now a strategic differentiator.

To retain high-trust clients in government and finance, data centers must embed synthetic-fraud protections directly into their technical stack, training, insurance, and contracts. The age of believing what we see is over. The age of verifying it – continuously and contractually – has begun.
